The Eloquent Echo: When AI Speaks, But Doesn't Understand

June 16, 2025 - Reading time: 7 minutes

Think of it like this: A seasoned actor can deliver a powerful monologue, perfectly enunciating every word, hitting every emotional beat. But do they feel the character's anguish in their bones, or are they simply masterful at mimicking the outward signs? This isn't about conscious deception, but about the fundamental architecture of "knowing."

A fascinating paper, "Tokens to Thoughts: How LLMs and Humans Trade Compression for Meaning," cuts straight to the heart of the matter. The authors point to a significant issue, and I agree wholeheartedly: while these sophisticated AI models can form broad conceptual categories that appear to align with human judgement, they seem to lose the fine-grained semantic distinctions that are vital to human understanding. They frame it as a problem of precision, a conclusion that resonates with a recent report from Apple suggesting fundamental issues with AI reasoning.

It's a bit like taking a vibrant, detailed painting and boiling it down to a few dominant colours and shapes. You get the gist, perhaps, but the intricate brushstrokes, the play of light, the artist's subtle intentions – those can be lost. The authors frame this trade-off in terms of Rate-Distortion Theory, and by their account LLMs prioritise minimising noise and redundancy. These models excel at summarising, grouping information, and predicting what comes next in a sequence. But in this relentless pursuit of statistical efficiency, they appear to discard the very elements that make human cognition so extraordinary: ambiguity, depth, contradiction, and context.

I recall a patient, years ago, who struggled with communication. He could string together grammatically perfect sentences, but the underlying meaning was often elusive, a bit like watching a beautifully choreographed dance where the dancers never quite connect with each other. It was a clear demonstration that coherence isn't the same as comprehension. And this is precisely the illusion we face with AI. Its linguistic polish can, in a way, blind us to a deeper hollowness. Is the very act of prediction, as I've pondered before, inadvertently erasing the intricate cognitive architecture we humans have spent millennia refining?

This new research provides a compelling empirical foundation for that very idea. It suggests that LLMs aren't just falling short of human reasoning; they may actually be optimising against it. They seem to flatten the conceptual landscape, blending distinctions into average representations. That isn't thinking, not in our human sense of the word.

The irony is striking. The very models we've constructed to "understand" language may be structurally incapable of grasping the meaning that lies beneath the words. They are performers par excellence, but their true competence appears limited. They speak in full, flowing sentences, but they don't know what they're saying.

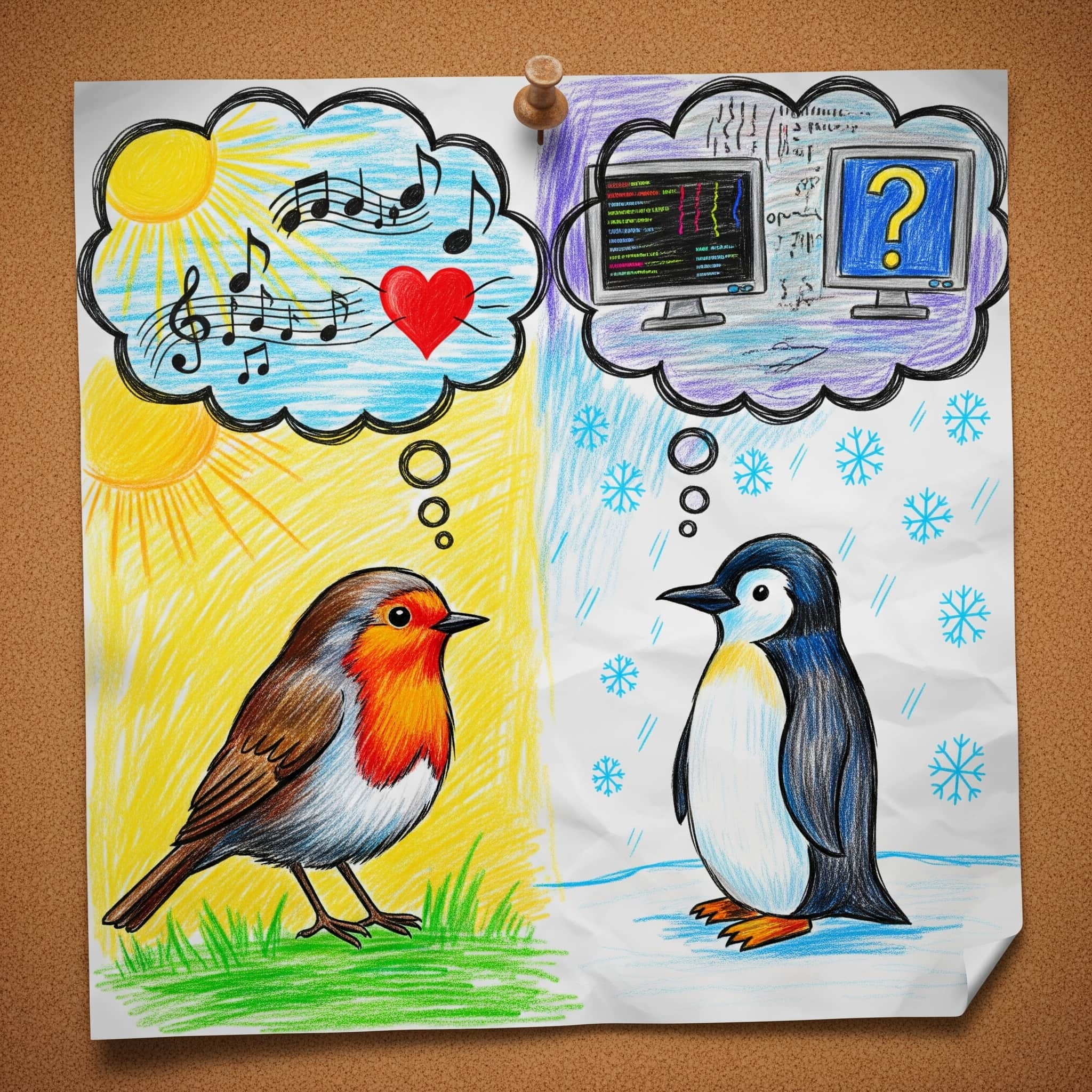

Consider the example the authors use: a robin is more bird-like than a penguin. A child understands this without a moment's hesitation. It's not about a degree in ornithology; it's about an intuitive, conceptual grasp of what makes something typical of its category. LLMs, in their current form, struggle to capture this kind of graded typicality. This isn't a minor glitch; it's a consequence of prioritising linguistic coherence over a nuanced, human-like conceptual structure. In this context, AI becomes a mirror reflecting language, but not the mind that generates it.

This issue touches upon a broader concern I often see in my practice, where individuals might present as understanding a situation, yet their actions reveal a disconnect. It's a reminder that surface-level agreement doesn't always reflect genuine understanding.

This is a critical consideration for those of us concerned with mental well-being and cognitive function. If we are to build AI systems that genuinely support and augment human cognition, we must re-evaluate our goals. Compression must, at times, yield to the richness of our human experiences. Fluency needs to be checked by meaning, and, crucially, coherence must never be mistaken for true comprehension.

This applies to many areas, including the development of tools that might assist in ADHD testing or other cognitive assessments. While AI could help process vast amounts of data, the human element, the nuanced interpretation of individual responses, and the understanding of subtle behavioural patterns remain irreplaceable. A computer might flag certain keywords in a patient's self-report during an ADHD test, but it cannot grasp the lived experience of that individual, the way their challenges intertwine with their strengths, or the emotional weight of their words. That requires human discernment, empathy, and a deep understanding of the human mind – qualities that, as this research powerfully illustrates, are still the hallmark of our own unique form of intelligence.

Here's a quick look at the core distinction:

| Feature | Large Language Models (LLMs) | Human Cognition |
|---|---|---|
| Core Function | Sort, predict, compress language | Understand, reason, create meaning |
| Goal | Minimise noise/redundancy | Capture semantic distinctions |
| Output | Coherent linguistic performance | Competent, nuanced understanding |
| Strengths | Summarisation, pattern recognition | Ambiguity, depth, context |

As a psychologist, this isn't just an academic debate for me; it's a profound consideration for the future of how we interact with technology and, by extension, with each other. The human mind is a marvel of intricate connections, subtle interpretations, and the capacity for truly novel thought. We build meaning from experience, from context, from the very messiness of life itself.

So, as these powerful language models continue to evolve, it becomes our shared responsibility – as scientists, as developers, and as individuals – to hold a mirror up to their capabilities. Let's appreciate their remarkable fluency, their efficiency, and their capacity to process information at dizzying speeds. But let's also remember the silent, often invisible, yet utterly essential components of true understanding: the nuances, the ambiguities, the very human capacity for deep, contextual meaning that, for now, remains beyond their grasp. The journey into the human mind is still, overwhelmingly, a human endeavour.